Notes to self, 2011

2011-12-12 - mysql slow / queries / sample

Sometimes you're in a situation where you know that a database is more heavily loaded than it should be. Time to figure out which queries are stressing it the most.

The standard thing to do with a MySQL database would be to enable

query logging with general_log_file. Or, to get only slow

queries and those not using indexes, the log_slow_queries.

But, if this is a mission critical and heavily loaded database, adding expensive logging may be just enough to give it that final push to become overloaded.

Perhaps taking just a sample of queries is good enough too. In that case this script is for you:

$ sed -e '/This little/,/^import/!d' mysql-slow-queries-sample.py ; echo ...

# This little script can be used to take a sampling of queries that

# are run often and/or take a lot of time.

#

# Every time you run this, a single 'show processlist' is executed on

# the local mysqld. If any queries are seen, they're cleaned up a bit

# and printed to stdout. Run this as often as you feel comfortable

# with; if run often/long enough you'll get statistically significant

# data about which queries put the most load on your database.

#

# Example output sort:

# $ grep -v '^\[' output.txt | sed -e 's/^[^:]*: //' |

# sed -e 's/[0-9]\{5,\}/<NUMBER>/g' | sort | uniq -c | sort -n |

# tail -n5

#

import datetime, re, subprocess

...

Get the full script here: mysql-slow-queries-sample.py (view)

2011-12-11 - postgres / alter column / look closer

Just now, I tried to convert an integer column in a PostgreSQL database to one of type VARCHAR. I knew you had to do an explicit cast, so I was a bit stumped that I still wasn't allowed to perform the ALTER TABLE.

mydb=> ALTER TABLE mytable ALTER COLUMN mycolumn TYPE VARCHAR(31) USING mycolumn::text; ERROR: operator does not exist: character varying >= integer HINT: No operator matches the given name and argument type(s). You might need to add explicit type casts.

Wait what? A >= operator?

mydb=> \d mytable

...

Check constraints:

"mytable_mycolumn_check" CHECK (mycolumn >= 0)

...

In the Netherlands we call it looking further than one's nose is long.

mydb=> ALTER TABLE DROP CONSTRAINT mytable_mycolumn_check; ALTER TABLE mydb=> ALTER TABLE mytable ALTER COLUMN mycolumn TYPE VARCHAR(31) USING mycolumn::text; ALTER TABLE

Better..

2011-12-02 - fixing symptoms / not problems

Some people seem to think that fixing the symptom is fixing the problem.

import random

def return_one_of(list):

return list[random.randint(0, len(list))]

def say_something():

try:

print return_one_of(["Hello World!", "Hi!", "How you doin'?"])

except:

return say_something()

say_something()

Gah!

This is obviously an example, but there are people who do this and claim to have "fixed the problem".

Let me reiterate: the fact that your code does not raise any exceptions does NOT mean that it is not broken code!

2011-11-15 - django / mongodb / manage dbshell

The current django-mongodb-engine doesn't seem to ship with a working

manage dbshell command yet. Right now it returns this:

$ ./manage.py dbshell

...

File "/home/walter/.virtualenvs/myproject/lib/python2.6/site-packages/django/core/management/commands/dbshell.py", line 21, in handle

connection.client.runshell()

File "/home/walter/.virtualenvs/myproject/lib/python2.6/site-packages/django_mongodb_engine/base.py", line 108, in __getattr__

raise AttributeError(attr)

AttributeError: client

The fix is simple, patch your django_mongodb_engine with this:

--- django_mongodb_engine/base.py.orig 2011-11-15 11:53:47.000000000 +0100

+++ django_mongodb_engine/base.py 2011-11-15 11:54:07.000000000 +0100

@@ -7,6 +7,7 @@

from pymongo.connection import Connection

from pymongo.collection import Collection

+from .client import DatabaseClient

from .creation import DatabaseCreation

from .utils import CollectionDebugWrapper

@@ -87,6 +88,7 @@

self.features = DatabaseFeatures(self)

self.ops = DatabaseOperations(self)

self.creation = DatabaseCreation(self)

+ self.client = DatabaseClient(self)

self.introspection = DatabaseIntrospection(self)

self.validation = DatabaseValidation(self)

self.connected = False

--- /dev/null 2011-10-04 03:15:41.939918405 +0200

+++ django_mongodb_engine/client.py 2011-11-15 11:52:13.000000000 +0100

@@ -0,0 +1,15 @@

+import os

+import sys

+

+from django.db.backends import BaseDatabaseClient

+

+class DatabaseClient(BaseDatabaseClient):

+ executable_name = 'mongo'

+

+ def runshell(self):

+ settings_dict = self.connection.settings_dict

+ args = [self.executable_name, settings_dict['NAME']]

+ if os.name == 'nt':

+ sys.exit(os.system(" ".join(args)))

+ else:

+ os.execvp(self.executable_name, args)

This was tested with django 1.3 (wkornewald nonrel) and django-mongodb-engine 0.4.0.

2011-10-20 - certificate verify fail / crt / bundle

So. SSL certificates are still black magic to me. Especially when they cause trouble.

Like when one of the sysadmins has forgotten to add the certificate bundle to the

apache2 config.

Then you get stuff like this:

$ hg pull -u abort: error: _ssl.c:503: error:14090086:SSL routines:SSL3_GET_SERVER_CERTIFICATE:certificate verify failed

Most web browsers do not notice this as they already have the intermediate CA files,

but /etc/ssl/certs/ca-certificates.crt seemingly doesn't.

The problem in this case was not that I was missing any certificates locally. The problem was that the web server was not publishing the child certificates of which the server certificate was a grandchild.

openssl can tell you all this, if you know how:

$ echo quit | openssl s_client -connect code.osso.nl:443 | sed -e '1,/^Server certificate/!d' depth=0 OU = Domain Control Validated, OU = PositiveSSL Wildcard, CN = *.osso.nl verify error:num=20:unable to get local issuer certificate verify return:1 depth=0 OU = Domain Control Validated, OU = PositiveSSL Wildcard, CN = *.osso.nl verify error:num=27:certificate not trusted verify return:1 depth=0 OU = Domain Control Validated, OU = PositiveSSL Wildcard, CN = *.osso.nl verify error:num=21:unable to verify the first certificate verify return:1 CONNECTED(00000003) DONE --- Certificate chain 0 s:/OU=Domain Control Validated/OU=PositiveSSL Wildcard/CN=*.osso.nl i:/C=GB/ST=Greater Manchester/L=Salford/O=Comodo CA Limited/CN=PositiveSSL CA --- Server certificate

I had to add the bundle of CA files that reference each other: issuer is the next signee in the list.

For apache2 this bundle is supposed to be ordered bottom-up. So,

next to your SSLCertificateFile you should always have a

SSLCertificateChainFile pointing to the bundle of intermediates.

SSLCertificateChainFile /etc/ssl/certs/code_osso_nl_CA.pem

Now, the openssl connect output looks a lot better:

$ echo quit | openssl s_client -connect code.osso.nl:443 | sed -e '1,/^Server certificate/!d' depth=3 C = SE, O = AddTrust AB, OU = AddTrust External TTP Network, CN = AddTrust External CA Root verify error:num=19:self signed certificate in certificate chain verify return:0 CONNECTED(00000003) DONE --- Certificate chain 0 s:/OU=Domain Control Validated/OU=PositiveSSL Wildcard/CN=*.osso.nl i:/C=GB/ST=Greater Manchester/L=Salford/O=Comodo CA Limited/CN=PositiveSSL CA 1 s:/C=GB/ST=Greater Manchester/L=Salford/O=Comodo CA Limited/CN=PositiveSSL CA i:/C=US/ST=UT/L=Salt Lake City/O=The USERTRUST Network/OU=http://www.usertrust.com/CN=UTN-USERFirst-Hardware 2 s:/C=US/ST=UT/L=Salt Lake City/O=The USERTRUST Network/OU=http://www.usertrust.com/CN=UTN-USERFirst-Hardware i:/C=SE/O=AddTrust AB/OU=AddTrust External TTP Network/CN=AddTrust External CA Root 3 s:/C=SE/O=AddTrust AB/OU=AddTrust External TTP Network/CN=AddTrust External CA Root i:/C=SE/O=AddTrust AB/OU=AddTrust External TTP Network/CN=AddTrust External CA Root --- Server certificate

There's still one error left, but that's correct. We need to trust the root certificate.

Now the hg pull works again, like it should.

P.S. Some applications want the certificate bundle in a different order.

dovecot (ssl_cert_file) wants it bottom-up,

with the server certificate first in the same file.

postfix (smtpd_tls_CAfile), on the other hand,

likes it top-down.

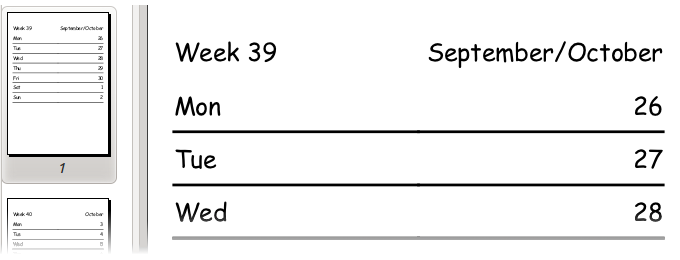

2011-10-02 - printable weekly calendar / generate

My wife wanted to create new pages for the weekly block calendar that she had made in Microsoft Excel. But she was reluctant to update all the dates by hand. Obviously.

So.. for her enjoyment, I hacked up this little CLI/CGI script that produces a PDF with one page per week for as many weeks as she likes.

The output looks like this:

Use it like this:

You might want to change more than just the date and the amount of weeks. In that case, you shall fetch the source: weekcalendar.py (view)

It uses python-pisa for simple XHTML to PDF conversion. It

can be run as standalone CLI app, so you won't need a webserver.

2011-09-12 - backtrace / without debugger

You may not always have gdb(1) at hand. Here are a couple of other options

at your disposal.

#1 Use addr2line to get the crash location

$ cat badmem.c

void function_c() { int *i = (int*)0xdeadbeef; *i = 123; } // <-- line 1

void function_b() { function_c(); }

void function_a() { function_b(); }

int main() { function_a(); return 0; }

$ gcc -g badmem.c -o badmem

$ ./badmem

Segmentation fault

No core dump? You can still get some info.

$ tail -n1 /var/log/syslog ... badmem[1171]: segfault at deadbeef ip 00000000004004da sp 00007fff8825dcd0 error 6 in badmem[400000+1000] $ echo 00000000004004da | addr2line -Cfe ./badmem function_c /home/walter/srcelf/bt/badmem.c:1

#2 Do platform specific stack wizardry

We extend the badmem.c example from above and add non-portable backtrace facilities. (Tested on an x86_64.)

$ cat poormanbt.c

#include <ucontext.h>

#include <unistd.h>

#include <signal.h>

#include <stdio.h>

#define REG_RSP 15

#define REG_RIP 16

void function_c() { int *i = (int*)0xdeadbeef; *i = 123; } // <-- line 7

void function_b(int a, int b, int c) { function_c(); } // <-- line 8

void function_a() { function_b(1, 2, 3); } // <-- line 9

void poormanbt(int signum, siginfo_t *info, void *data) {

struct ucontext *uc = (struct ucontext*)data;

unsigned long *l = (unsigned long*)uc->uc_mcontext.gregs[REG_RSP];

printf("%lx (ip) %lx (sp)\n", uc->uc_mcontext.gregs[REG_RIP], (unsigned long)l);

for (; *l; l = (unsigned long*)*l) { printf("%lx (ip) %lx (sp)\n", l[1], l[0]); }

fflush(stdout);

_exit(1);

}

int main() {

// prepare bt

struct sigaction sa = {0,};

sa.sa_sigaction = poormanbt;

sigfillset(&sa.sa_mask);

sa.sa_flags = SA_SIGINFO;

sigaction(SIGSEGV, &sa, (void*)0);

// fire badmem

function_a();

return 0; // <-- line 28

}

We have it output instruction pointer addresses from the stack at SIGSEGV.

This example uses the SA_SIGINFO flag that enables signal stack info to

be passed into the signal handler.

$ gcc poormanbt.c -g -o poormanbt $ ./poormanbt 4006ba (ip) 7fff61feb150 (sp) 4006dd (ip) 7fff61feb170 (sp) 4006f7 (ip) 7fff61feb180 (sp) 40080e (ip) 7fff61feb240 (sp) $ ./poormanbt | addr2line -Cfe ./poormanbt function_c /home/walter/srcelf/bt/poormanbt.c:7 function_b /home/walter/srcelf/bt/poormanbt.c:8 function_a /home/walter/srcelf/bt/poormanbt.c:9 main /home/walter/srcelf/bt/poormanbt.c:28

But you really don't want to do the above. Enter execinfo.

#3 Use the special purpose execinfo backtrace

This is far more portable.

$ cat libbt.c

#include <execinfo.h>

#include <unistd.h>

#include <signal.h>

#include <stdio.h>

void function_c() { int *i = (int*)0xdeadbeef; *i = 123; } // line 5

void function_b(int a, int b, int c) { function_c(); } // line 6

void function_a() { function_b(1, 2, 3); } // line 7

void libbt(int signum, siginfo_t *info, void *data) {

int i, j;

void *buffer[16];

i = backtrace((void**)&buffer, 16); // <-- line 12

for (j = 0; j < i; ++j) { printf("%p (ip)\n", buffer[j]); }

fflush(stdout);

_exit(1);

}

int main() {

// prepare bt

struct sigaction sa = {0,};

sa.sa_sigaction = libbt;

sigfillset(&sa.sa_mask);

sigaction(SIGSEGV, &sa, (void*)0);

// fire badmem

function_a();

return 0; // line 25

}

We changed poormanbt.c around a bit and are using backtrace(3)

instead of the ucontext_t. This function seems to know what it's doing.

$ gcc libbt.c -g -o libbt $ ./libbt 0x40077c (ip) 0x7f538e77baf0 (ip) 0x40070a (ip) 0x40072d (ip) 0x400747 (ip) 0x40083d (ip) 0x7f538e766c4d (ip) 0x400639 (ip) $ ./libbt | addr2line -Cfe ./libbt libbt /home/walter/srcelf/bt/libbt.c:12 ?? ??:0 function_c /home/walter/srcelf/bt/libbt.c:5 function_b /home/walter/srcelf/bt/libbt.c:6 function_a /home/walter/srcelf/bt/libbt.c:7 main /home/walter/srcelf/bt/libbt.c:26 ?? ??:0 _start ??:0

Useful? Not so often. But it's good to know these methods exist.

2011-09-06 - gdb / backtrace / running process

Sometimes you want a backtrace or a core dump from a process that you do not

want to stall. This could concern a multithreaded application of which some

threads are still doing important work (like handling customer calls).

Firing up gdb would halt the process for as long as you're

getting info, and raising a SIGABRT to get a core dump has

the negative side-effect of killing the process. Neither is acceptable in

a production environment.

In comes the handy gdb(1) option -ex. See this

hanging.c example that we will examine while leaving it running.

int c() {

while(1);

return 64;

}

int b() {

return c();

}

int a() {

return b();

}

int main() {

return a();

}

Fire it up, gather info, and keep running:

$ gcc hanging.c -o hanging -g $ ./hanging & [1] 787 $ time gdb -p `pidof hanging` -ex bt -ex 'thread apply all bt full' -ex detach -ex quit ... c () at hanging.c:2 2 while(1); #0 c () at hanging.c:2 #1 0x00000000004004d8 in b () at hanging.c:6 #2 0x00000000004004e8 in a () at hanging.c:9 #3 0x00000000004004f8 in main () at hanging.c:12 Thread 1 (process 787): #0 c () at hanging.c:2 No locals. #1 0x00000000004004d8 in b () at hanging.c:6 No locals. #2 0x00000000004004e8 in a () at hanging.c:9 No locals. #3 0x00000000004004f8 in main () at hanging.c:12 No locals. Detaching from program: /home/walter/hanging, process 787 real 0m0.128s user 0m0.120s sys 0m0.020s $ fg ./hanging

Obviously the process does hang while gdb gathers the required information, but it resumes immediately after, hopefully without your users noticing it.

You can write a core dump too, if you like. But this can require a bit more time, depending on how much memory your process is using.

# cat /proc/`pidof asterisk`/status | grep VmRSS VmRSS: 13236 kB # time gdb -p `pidof asterisk` -ex generate-core-file -ex detach -ex quit ... 0x00007f1f01e5ebd6 in poll () from /lib/libc.so.6 Saved corefile core.313 Detaching from program: /usr/local/sbin/asterisk, process 313 real 0m1.972s user 0m0.192s sys 0m0.332s # ls -lh core.313 -rw-r--r-- 1 root root 15M 2011-09-06 08:43 core.313

A couple of notes about this last example:

- RSS is only indicative of the dump size. The dump may very well turn out twice as large.

- Most of the time spent here was loading symbols. A 30MB dump won't take twice as long. An 800MB dump will take some time though. Beware.

2011-09-04 - sip / six digit port number / invalid

While looking through opensips logs of a customer, sometimes we would see the following:

ERROR:core:parse_via: invalid port number <110900> ERROR:core:parse_via: <SIP/2.0/UDP 1.2.3.4:110900;branch=z9hG4bKabcdef... ERROR:core:parse_via: parsed so far:<SIP/2.0/UDP 1.2.3.4:110900;branch=z9hG4bKabcdef... ERROR:core:get_hdr_field: bad via

As you can see, that 6-digit port number is invalid. Furthermore, when sniffing this traffic, we could see that the port number is almost right. The traffic came from port 11090 (one less zero at the end).

Not only the Via header, the Contact header too had

the extra appended zero.

The first thought — a broken SIP ALG — was too simple an explanation. It seems implausible that an ALG broken that badly would be allowed to be deployed. Unless it's a spurious error, caused by some extra environmental influence.

Recently I was at someones house and couldn't get a SIP client to register remotely. It turned out that they had this exact same issue. And that gave me a chance to investigate.

The cause, turned out that the issue was a sum of the following:

- Double NAT — a wireless router doing NAT and an ADSL-modem doing NAT.

- ALG in the Zyxel ADSL-modem doing blind replacements of Via and Contact.

- Human readable RFC1918 addresses of different sizes — in my case 192.168.1.33 for the wireless router and 192.168.2.201 for my laptop.

My SIP clients sends out Via: SIP/2.0/UDP 192.168.2.201:5060;..., the

wireless router doesn't touch it, but changes the source IP to 192.168.1.33,

the Zyxel ALG sees the SIP traffic and replaces a string of length "192.168.1.33:5060"

with 1.2.3.4:11090.

But it didn't say 192.168.1.33:5060, it said 192.168.2.201:5060!

So, the altered SIP packet gets a leftover zero from the original message.

The moral of the story: even though SIP ALGs may come in handy, too often they're not coded correctly. Failing to check the SIP packet for the source IP and simply blindly replacing it, breaks more than it fixes.

P.S. Altering your IP to be the same "size" doesn't fix things. The ALG still does

Via replacements for replies. You'll receive packets in which the top-most Via IP

is not your own — but the wireless router's — discouraging SIP clients from

accepting these replies.

2011-07-13 - openswan klips install / modules

If you want to be able to sniff your IPsec traffic with OpenSwan,

you'll need to get KLIPS instead of the default NETKEY

IPsec protocol stack.

Installing that on Ubuntu/Karmic should be a matter of:

~# apt-get install openswan-modules-source ~# cd /usr/src /usr/src# tar jxvf openswan-modules.tar.bz2 /usr/src# cd modules/openswan /usr/src/modules/openswan# make KERNELSRC=/lib/modules/`uname -r`/build module module_install

But it's not.

Right now, we're running the default Linux kernel 2.6.31-23-server on this Karmic machine. And as it happens, in 2.6.31 they removed networking compatibility code.

All drivers are already converted to new net_device_ops API and nobody uses old API anymore.

...

-#ifdef CONFIG_COMPAT_NET_DEV_OPS

- struct {

- int (*init)(struct net_device *dev);

- void (*uninit)(struct net_device *dev);

- int (*open)(struct net_device *dev);

- int (*stop)(struct net_device *dev);

- int (*hard_start_xmit) (struct sk_buff *skb,

- struct net_device *dev);

- u16 (*select_queue)(struct net_device *dev,

...

So after a couple of succesful object compilations you get this:

CC [M] /usr/src/modules/openswan/modobj26/ipsec_tunnel.o modobj26/ipsec_tunnel.c: In function ‘ipsec_tunnel_attach’: modobj26/ipsec_tunnel.c:1117: error: ‘struct net_device’ has no member named ‘set_mac_address’ modobj26/ipsec_tunnel.c:1119: error: ‘struct net_device’ has no member named ‘hard_start_xmit’

The fix: don't use the old Ubuntu supplied version.

/usr/src# git clone git://git.openswan.org/public/scm/openswan.git openswan-2 /usr/src# cd openswan-2 /usr/src/openswan-2# make KERNELSRC=/lib/modules/`uname -r`/build module module_install /usr/src/openswan-2# modprobe ipsec

/usr/src/openswan-2# ip addr | grep ipsec 4: ipsec0: <NOARP,PROMISC> mtu 16260 qdisc pfifo_fast state DOWN qlen 10 5: ipsec1: <NOARP> mtu 0 qdisc noop state DOWN qlen 10

Good! Now we have a nice interface to sniff.

2011-07-12 - mocp / random / enqueue

After disk failure on our company music server, I lost my enqueue-some-random-music-script.

That shan't happen again. So here, for my own enjoyment: autoenq.sh

#!/bin/sh

enqueue_app="mocp -a"

music_glob="*.mp3"

music_path="`dirname "$0"`"

list_path="$music_path/.autoenq.list"

if [ "$*" = "-c" ]; then

# Create list of all files

find . -type f -iname "$music_glob" > "$list_path.tmp" 2>/dev/null # no lost+found

# Create list of all dirs that have files

cat "$list_path.tmp" | sed -e 's/\/[^\/]*$//' | sort | uniq > "$list_path"

exit 0

fi

args="`echo "$*" | sed -e "s/['\\\\]//g"`" # no backslashes and single quotes please

args="`echo "$args" | sed -e 's/[[:blank:]]\+/.*/g'`" # replace blanks with .*

generator="grep -i '$args' '$list_path' | shuf"

exec 3<&0 # stdin as fd 3

eval $generator | while read -r dir; do

echo -n "Enqueue $dir [Y/n]? "

read answer <&3 # read from stdin

if [ -z "$answer" -o "$answer" = "y" -o "$answer" = "Y" ]; then

find "$dir" -maxdepth 1 -type f -iname "$music_glob" | xargs -d\\n $enqueue_app

exit 0

fi

done

Run /somewhere/autoenq.sh -c nightly to update the file (directory) list.

Example usage:

$ ./autoenq.sh ltj big bud Enqueue ./LTJ/Big Bud - Fear of Flying/CD 2 [Y/n]? n Enqueue ./LTJ/GLRMA 001 - Big Bud - Infinity & Infinity [Y/n]?

Now mocp is populated with the files from that second path.

Noteworthy parts in the code above:

- We duplicate

stdinto fd 3 in the main shell so we can use that in thewhile-subshell. If we didn't, theanswerto the question would be read from the generator as well. - The

$generatoris created earlier for extensibility, you may want to addgrep -vstuff (exclusions) for certain command line parameters in the future. - It takes care to only enqueue files, not directories. Otherwise

mocpwill recursively get directory contents, which is not what you want. (Especially not if you have a stray mp3 file in your root music directory.)

2011-07-04 - asterisk dialplan peculiarities / regex with eqtilde

In the Asterisk PBX dialplan, expressions can be formed using the $[...] syntax.

Addition, subtraction, comparison and so on are defined. As is a regex operator: =~

Unfortunately, the documentation about the details of the implementation is hard to find.

Here, a breakdown of my findings:

static struct val * op_eqtildeis defined inmain/ast_expr2.y- It uses the

REG_EXTENDEDflag when callingregcomp: so extended regular expression syntax is used. - The

REG_ICASEflag is NOT used: case sensitive. - If you don't use parentheses (substring addressing) the returned value is the amount of characters that match:

rm[0].rm_eo - rm[0].rm_so - If you do use parentheses, the returned value is the content of the outermost substring match: slice of original between

rm[1].rm_soandrm[1].rm_eo

That last bit can cause a bit of trouble. For example, you would think that the following two statements are equivalent.

exten => 333,1,Hangup(${IF($["${HANGUPCAUSE}"=~"^0$|^27$|^29$|^34$|^38$|^42$|^66$"]?${HANGUPCAUSE}:USER_BUSY)})

...and...

exten => 333,1,Hangup(${IF($["${HANGUPCAUSE}"=~"^(0|27|29|34|38|42|66)$"]?${HANGUPCAUSE}:USER_BUSY)})

They aren't. The latter one will give you an error and a Hangup() with an empty argument when ${HANGUPCAUSE}

falls outside the listed values, because the value returned to IF() is empty.

func_logic.c: Syntax IF(<expr>?[<true>][:<false>]) (expr must be non-null, and either <true> or <false> must be non-null)

func_logic.c: In this case, <expr>='', <true>='USER_BUSY', and <false>='0'

pbx.c: -- Executing [333@context:1] Hangup("SIP/somewhere", "") in new stack

The easy fix: add a LEN(). It'll look better than the first priority (extension rule) shown.

exten => 333,1,Hangup(${IF(${LEN($["${HANGUPCAUSE}"=~"^(0|27|29|34|38|42|66)$"])}?${HANGUPCAUSE}:USER_BUSY)})

2011-06-09 - executing remote command / ssh / extra escaping

If you use ssh to run commands remotely, you may have run into the problem that

you need an extra layer of escaping.

Let's say you have application myapp that for some reason only runs on host myserver.

If you have functional ssh keys to log onto myserver it can be helpful to create a

myapp wrapper on your desktop. After all, this:

$ myapp myargs

... is far more convenient than doing this:

$ ssh myserver $ myapp myargs $ logout

(Especially if you want to do stuff with stdin and stdout.)

The naive approach to /usr/local/bin/myapp is this:

#!/bin/sh ssh myserver $*

This has a number of problems:

- The arguments can interpreted as

sshoptions if they begin with a-(hyphen). Thesshclient I'm dealing with right now plays nice, but it's not a bad idea to add--before$*. $*is expanded immediately, so every argument with spaces in it is broken up. This needs fixing. And no, passing"$@"does not help. You're right in that now arguments with spaces are passed tosshas a single argument, but it still needs an extra level of escaping (*).- The remote end thinks that there is no tty on the other end, causing password inputs to break, among other things.

If you need a tty, you can fix this by adding

-tto the list ofsshoptions, the drawback being that output fromstdoutandstderris now combined intostdout.

The proper solution (add -t only if needed):

#!/bin/sh args="" for arg in "$@"; do args="$args '`echo "$arg" | sed -e "s/'/'\\\\\\\\''/g"`'"; done ssh myserver -- myapp $args

Yes, it looks like a kludge. But it forces single quotes around all your arguments and works like a charm.

(*) The extra expansion is needed to get things like ssh myserver -- rm '*.png' to work.

2011-06-08 - django / query expression / negate

Suppose you have an is_enabled boolean in your Django model.

class Rule(models.Model):

is_enabled = models.BooleanField(blank=True)

# other exciting fields here

And now imagine you want to negate the is_enabled values. Something you would

easily do in SQL, with: UPDATE myapp_rule SET is_enabled = NOT is_enabled;

The Django F-syntax is nice, and looks like it should be up for the task.

Let's sum up a couple of attempts:

Rule.objects.update(is_enabled=(not F('is_enabled')))

No! You get this:

UPDATE myapp_rule SET is_enabled = true;

Rule.objects.update(is_enabled=(True ^ F('is_enabled')))

No! You get this:

unsupported operand type(s) for ^: 'bool' and 'F'

And, as you might guess is_enabled=-F('is_enabled') and is_enabled=~F('is_enabled')

yield an error similar to the previous one.

If you're using MySQL, you just might get away with is_enabled=(True - F('is_enabled')).

But PostgreSQL will throw an operator does not exist: boolean - boolean error.

Okay. So, on to implementing the not-operator for the ExpressionNode.

No! There is no __not__() operator for object instances. But we can use __invert__()

which is called by the ~-operator (bitwise not).

Getting that to work with Django 1.2, is a matter of this:

--- django/db/models/expressions.py.orig 2011-06-08 17:28:54.647385267 +0200

+++ django/db/models/expressions.py 2011-06-08 21:12:47.607603534 +0200

@@ -20,6 +20,9 @@ class ExpressionNode(tree.Node):

AND = '&'

OR = '|'

+ # Logical operators

+ NOT = 'NOT' # unary, needs special attention in combine_expression

+

def __init__(self, children=None, connector=None, negated=False):

if children is not None and len(children) > 1 and connector is None:

raise TypeError('You have to specify a connector.')

@@ -48,6 +51,10 @@ class ExpressionNode(tree.Node):

# OPERATORS #

#############

+ def __invert__(self):

+ obj = ExpressionNode([self], connector=self.NOT, negated=True)

+ return obj

+

def __add__(self, other):

return self._combine(other, self.ADD, False)

--- django/db/backends/__init__.py.orig 2011-06-08 20:59:19.307387242 +0200

+++ django/db/backends/__init__.py 2011-06-08 21:03:19.367604113 +0200

@@ -472,6 +472,9 @@ class BaseDatabaseOperations(object):

can vary between backends (e.g., Oracle with %% and &) and between

subexpression types (e.g., date expressions)

"""

+ if connector == 'NOT':

+ assert len(sub_expressions) == 1

+ return 'NOT (%s)' % sub_expressions[0]

conn = ' %s ' % connector

return conn.join(sub_expressions)

And now this works like it's supposed to:

Rule.objects.update(is_enabled=~F('is_enabled'))

On to find out what the Django community thinks the real solution should be ;-)

2011-05-16 - mysql issue warnings / cron

I've previously written about MySQL pain in the behind issues involving views with SECURITY DEFINER and bad client collation selection.

For the former problem, I wrote a script that you could call periodically to warn you of potential problems with your views. Now I've extended it to warn you about collation issues as well.

Put warn-mysql-issues.sh (view) in your cron tab and run it periodically. It'll save you from production-time errors that you get when attempting to compare a string of one collation with another.

ERROR 1267 (HY000): Illegal mix of collations (utf8_general_ci,EXPLICIT) and (utf8_unicode_ci,EXPLICIT) for operation '='

P.S. I've found that the option skip-character-set-client-handshake in the MySQL config

works better than default-character-set = utf8 because the lack of a

default-collation-connection option can get you utf8_general_ci autoselected

instead.

2011-04-28 - linux / canon mf8350 / printer driver

Getting printer drivers for the Canon MF8350

to work under Ubuntu is a big

pain in the behind. (Installation using custom scripts that abuse both

/usr/lib and /usr/local/lib and ultimately fail to compile for obscure

reasons.)

My colleague found that the easiest way to get it to work, was converting

the RPM to DEB using alien(1). For your enjoyment, here are the two debian

packages needed for Ubuntu 10.04 (amd64):

cndrvcups-common_2.20-2_amd64.deb

cndrvcups-ufr2-uk_2.20-2_amd64.deb

They might work on other distro's or Ubuntu versions too. YMMV.

Update 2012-12-12

Look at the updated note for an updated driver.

2011-04-07 - diff / memory exhausted / udiff

Sometimes when I'm unsure what a button in a database-driven application does, I simply click it and check the differences in the database before and after the click.

If the database dumped is (somewhat) sorted and with one line per row (for MySQL use --skip-extended-insert), this can be an easy method of verifying that your application action does exactly what you expect and nothing more.

Create a pre.sql before the click, click the button and watch the

action happen. Finally, create a post.sql sql dump. Run diff(1)

on the files, and voilà: a nice readable listing of state changes.

Sometimes however, the files can be too large for regular diff to handle. See this example:

$ ls -lh pre.sql post.sql -rw-r--r-- 1 root root 286M 2011-02-23 16:55 post.sql -rw-r--r-- 1 root root 286M 2011-02-23 16:50 pre.sql $ time diff pre.sql post.sql diff: memory exhausted real 0m15.236s user 0m0.132s sys 0m2.480s

Ouch! Not enough memory. This is where udiff (microdiff or unified-diff,

whichever you prefer) comes in:

$ time ./udiff pre.sql post.sql >tmp.diff real 0m16.878s user 0m15.205s sys 0m1.372s

And you're left with a nice readable diff:

$ cat tmp.diff

--- pre.sql 2011-02-23 16:50:38.000000000 +0000

+++ post.sql 2011-02-23 16:55:03.000000000 +0000

@@ -444245,7 +444245,6 @@

INSERT INTO `billing...

INSERT INTO `billing...

INSERT INTO `billing...

-INSERT INTO `billing... (censored values)

INSERT INTO `billing...

INSERT INTO `billing...

INSERT INTO `billing...

@@ -872067,6 +872066,8 @@

INSERT INTO `django_admin_log` VALUES (4378,'2011-02-23 12:35:20'...

INSERT INTO `django_admin_log` VALUES (4379,'2011-02-23 12:35:31'...

INSERT INTO `django_admin_log` VALUES (4380,'2011-02-23 12:37:08'...

+INSERT INTO `django_admin_log` VALUES (4381,'2011-02-23 16:53:26'...

+INSERT INTO `django_admin_log` VALUES (4382,'2011-02-23 16:53:31'...

UNLOCK TABLES;

--

@@ -872226,7 +872227,7 @@

LOCK TABLES `django_session` WRITE;

INSERT INTO `django_session` VALUES ('d6b...

-INSERT INTO `django_session` VALUES ('8d7...

+INSERT INTO `django_session` VALUES ('8d7...

INSERT INTO `django_session` VALUES ('091...

UNLOCK TABLES;

Convinced? Get the udiff python application (view) and the test suite Makefile (view) now.

2011-03-31 - pcap / capture fragments / udp

When dealing with internet protocols that operate on top of UDP, fragmenting suddenly becomes a lot less uncommon.

Normally, you would only encounter fragments on TCP connections when the MTU on the sending host is larger then the MTU in any of the next hops. Hosts usually attempt to avoid fragmentation for obvious reasons. (Inefficiëncy, extra reassembly work.)

For connectionless UDP packets this is a different matter. Protocols over UDP expect packets to be single entities. This means that fragments must occur when the packet size exceeds the MTU.

Protocols like SIP (over UDP) work with packet data that can in some cases be larger than a normal MTU of 1500. Some tunnel setups drastically reduce the MTU, causing many if not all SIP packets to get fragmented.

Now, when you sniff this traffic using tcpdump(1) or ngrep(1),

the obvious filter would be: udp and port 5060. But, that would make you

miss out on these relevant fragments and ponder questions like:

How come my packets are incomplete?

Why does it still work?

The fix? Alter the filter rules to also look for the fragment header: bits 51..63 (most

of bytes 6 and 7) of the IP header contain the fragment offset. So, instead of looking

for udp, you would look for udp or (ip[6:2] & 0x1fff != 0).

ngrep -qtldeth0 -Wbyline '' '(udp and port 5060) or (ip[6:2] & 0x1fff != 0)' .. or .. ngrep -qtldeth0 -Wbyline '' '((udp and port 5060) or (ip[6:2] & 0x1fff != 0)) and host my.host.tld'

When sniffing a VoIP host, the RTP (media) data packet size will be so small that it shouldn't get fragmented and thus won't show up. The result: packet dumps with complete SIP data and nothing else.

2011-03-15 - no sql security definer / please

Have you ever had it happen that you removed a MySQL user and suddently parts of your application stopped working? Not because you removed the user that was connecting, but because you removed the user that defined the particular view or function that you were using.

I have, and it was quite stressful ;-)

We moved a slave machine to a different IP, I updated the mysql.user host

column, and BAM, the application running on the master mysql stopped working.

The cause: some views and functions had been defined through a connection that originated on the slave machine. This caused the DEFINER= to be set to that user. This user did not exist anymore for that particular IP. The result?

mysql> SELECT * FROM my_view; ERROR 1449 (HY000): There is no 'walter'@'old-host' registered

It turns out that MySQL default view/function creation is in "SQL SECURITY DEFINER" mode, for some unfathomable reason. This is like having SETUID on all your binaries by default.

The solution is to not do that. You will have to re-create your views and

functions, adding SQL SECURITY INVOKER to the definition SQL (DDL).

Run this in your cron every night to pester you that you still have some work to do:

warn-mysql-definer.sh

(view) is a little script

that checks the security type of your views and functions and spits out a warning

if you're using DEFINER-type security.

Run this before or after your MySQL backup (view) and take care of the problem before it bites you.

P.S.

The MySQL docs have this to say about the unfathomable:

The standard says that the definer of the view, which is the same as the owner of the view's schema, gets applicable privileges on the view (for example, SELECT) and may grant them. MySQL has no concept of a schema "owner", so MySQL adds a clause to identify the definer.

I think I would know it when I remove a schema owner. Removing a random user that may have had write powers in the past is not something that should trigger breakage.

2011-03-10 - build error / unixodbc / debian-squeeze

Building unixodbc-2.2.14p2 on debian/squeeze which you just fetched through apt-get source unixodbc.

~/src/unixodbc-2.2.14p2$ ./configure ... works fine ... ~/src/unixodbc-2.2.14p2$ make ... make[1]: Entering directory `/home/walter/src/unixodbc-2.2.14p2/odbcinst' make[1]: *** No rule to make target `libltdl/libltdlc.la', needed by `libodbcinst.la'. Stop. make[1]: Leaving directory `/home/walter/src/unixodbc-2.2.14p2/odbcinst' make: *** [install-recursive] Error 1

The fix: add top_build_prefix to the environment.

~/src/unixodbc-2.2.14p2$ top_build_prefix=`pwd`/ make ... success!

2011-02-27 - asterisk / nat keepalive / round robin dns

Current Asterisk (telephony software) version 1.6.2.x

(and probably 1.4 and 1.8), has an odd quirk with the qualify option.

The qualify option enables a function that checks the response times of the SIP peer. By default, it sends an OPTIONS SIP packet every 60 seconds. The quirk here, is that it sends the packet to the first A-record resolved for this peers hostname at startup (or sip reload).

This works fine in most cases when the host has only one A-record. However, if you have a sip server hostname (sip.myprovider.com) with multiple A-records for load-balancing purposes (round robin), this can cause problems.

Contact registration (using the REGISTER SIP command) is done simultaneously, but less frequently than the qualify OPTIONS. For SIP registration the A-record chosen can change at random.

What this means, is that the OPTIONS will be sent to a single IP destination for the entire running life of the asterisk server, while the REGISTERs will end up at a random server.

What this also means is that the NAT-keepalive functionality of the qualify option breaks.

When you think about how Asterisk works, it is correct: the registration of contacts is decoupled from the peer definitions where the qualify options are set. But from a practical point of view, it's bad because NAT-keepalive is broken.

A workaround: send UDP keepalives yourself (view). You don't need a response. Just sending some NULs is enough.

$ ./udp-keepalive.py sip.myprovider.com

sent '\x00\x00\x00\x00' to ('1.2.3.10', 5060)

sent '\x00\x00\x00\x00' to ('1.2.3.11', 5060)

sent '\x00\x00\x00\x00' to ('1.2.3.12', 5060)

... wait while ...

sent '\x00\x00\x00\x00' to ('1.2.3.10', 5060)

sent '\x00\x00\x00\x00' to ('1.2.3.11', 5060)

sent '\x00\x00\x00\x00' to ('1.2.3.12', 5060)

...

By the way, the Linksys SPA series VoIP phones do NUL keepalives too — empty packets in their case — if you set the NAT Keep Alive Msg to empty instead of $NOTIFY, $OPTIONS or $PING.

2011-01-21 - pruning old data / mysql / csv

It is not uncommon to have a database with records that just accumulate and accumulate over time. Think of log files, telephony billing records, traffic data and so forth.

The chances that you'll ever need this data again are very slim. And letting your database grow indefinitely is not particularly smart. Time to prune!

Two things you need to worry about while pruning your data:

- Throwing it all away without a backup doesn't feel right. But moving it to a different database is overkill. Store them as CSV. It's easy to read, easy to parse and therefore easy to reload in case you feel you went a bit far with the pruning.

- Dumping and deleting data on a live database can be disastrous for running processes. The locking can create such severe delays that the users of the database time out. Dreadful for business.

This MySQL dump-to-CSV script with pruning (view) capability takes care of these two issues for you. It dumps the tables you specify to CSV and prunes them afterwards, all up until the date you specify and keeping locking to a minimum by fetching and deleting only small bits at a time.

See this example:

$ ./mysql2csv.py --prune localhost root voip_db asterisk_cdr calldate 2010-01-01 MySQL server settings: root@localhost/voip_db Table to dump: asterisk_cdr (ordered by calldate, from begin to 2010-01-01) Filename template: "voip_db.asterisk_cdr.%Y%m.csv" Enter your MySQL password to begin dumping AND PRUNING!!! Password: Processing voip_db.asterisk_cdr (ordered by calldate) ++++++++ ...pruning

Afterwards you'll be left with asterisk_cdr records younger than (or equal to) 2010-01-01 00:00:00

and you'll get pretty .csv files for the deleted records in return.

$ ls voip_db.asterisk_cdr.200905.csv voip_db.asterisk_cdr.200906.csv voip_db.asterisk_cdr.200907.csv voip_db.asterisk_cdr.200908.csv voip_db.asterisk_cdr.200909.csv voip_db.asterisk_cdr.200910.csv voip_db.asterisk_cdr.200911.csv voip_db.asterisk_cdr.200912.csv mysql2csv.py $ head -n2 voip_db.asterisk_cdr.200906.csv id,calldate,clid,src,dst,dcontext,channel,dstchannel,lastapp,lastdata,duration,billsec,disposition,amaflags,accountcode,uniqueid,userfield 23831,"2009-06-01 07:12:25","""31900555112"" <31900555112>","31900555112","31333311199","call","SIP/22.22.22.22-c3c421ff","Local/0123456789@call_out-13fb,1","Dial","Local/0123456789@call_out/n|50|g",62,20,8,3,"","voip.example.com-1243826945.5",""

Update 2012-02-03

You may want to import these again too. mysqlimport(1) will do this

for you. If the following conditions are met:

- You need

File_privprivileges:GRANT FILE ON *.* TO YOURNAME@'%'; - The file needs to be named as the table. E.g.

/path/to/asterisk_cdr.csvif the table isasterisk_cdr. - The CSV file needs to be on the filesystem of the MySQL daemon.

Run this:

$ mysqlimport --ignore-lines=1 \

--fields-terminated-by=, --fields-optionally-enclosed-by='"' \

-h HOST -u NAME -p DATABASE /path/to/asterisk_cdr.csv

2011-01-14 - port forwarded ssh / port 22

Sometimes you need to access your source code repository-server from a new server which hasn't been whitelisted yet. You check out the source over port 22, but you can't, because traffic from new-server to 22 is rejected.

The quick solution, you know this, is ssh port forwarding. Connect to old-server and forward connections to repository-server from there.

$ ssh old-server -L1234:repository-server:22

That works. For mercurial, at least.

$ hg clone ssh://walter@localhost:1234//srv/hg/myproject myproject walter@localhost's password: requesting all changes ...

Okay, then a subversion project.

$ svn co svn+ssh://walter@localhost:1234/srv/svn/myproject/trunk myproject svn: Network connection closed unexpectedly

What?

Running a tcpdump shows that what is really is going on, is that it's attempting to connect to host localhost:1234 on port 22!

20:34:42.436283 IP (tos 0x0, ttl 64, id 38321, offset 0, flags [DF], proto UDP (17), length 60)

new-server.55451 > name-server.domain: 17380+ A? localhost:1234. (32)

Ok. So we need the forwarding on the right port. But we can hardly shut sshd down on the machine we're working on. What to do?

Selective port redirection using iptables.

Here you have to be aware that iptables does not do everything you might expect

on the lo interface. Use a phony IP instead. The REDIRECT target

ensures the traffic goes to 127.0.0.1 anyway.

$ nc localhost 22 SSH-2.0-OpenSSH_5.3p1 Debian-3ubuntu5 <-- self ^C $ nc localhost 1234 SSH-2.0-OpenSSH_5.5p1 Debian-3 <-- repository-server ^C $ sudo iptables -t nat -A OUTPUT -p tcp -d 1.2.3.4 --dport 22 -j REDIRECT --to-ports 1234 [sudo] password for user: $ nc 1.2.3.4 22 SSH-2.0-OpenSSH_5.5p1 Debian-3 <-- SUCCESS! the repository-server, on port 22 ^C

$ svn co svn+ssh://walter@1.2.3.4/srv/svn/myproject/trunk myproject walter@localhost's password: ...

That was a PITA. Enough time wasted. Time to get some actual work done ;-)